PRECISION LIVESTOCK COUNTING

Animals that move quickly and crowd into close quarters are hard for farmers to count accurately. Even slight discrepancies can mean big losses for agribusinesses.

40K | 100K+ | 99.9%


IDC Market Note: Customer Focus Makes for Computer Vision Success

Plainsight’s blend of visual AI and data science produces computer vision success where other providers fail. IDC explores this and the broader computer vision landscape in its new Market Note, including:

- The rapidly evolving landscape of the Computer Vision market poses challenges for providers in delivering effective solutions.

- Success in Computer Vision initiatives hinges on prioritizing customers’ end-to-end business processes.

- Plainsight has a proven history of prioritizing customer satisfaction, contributing to its achievements.

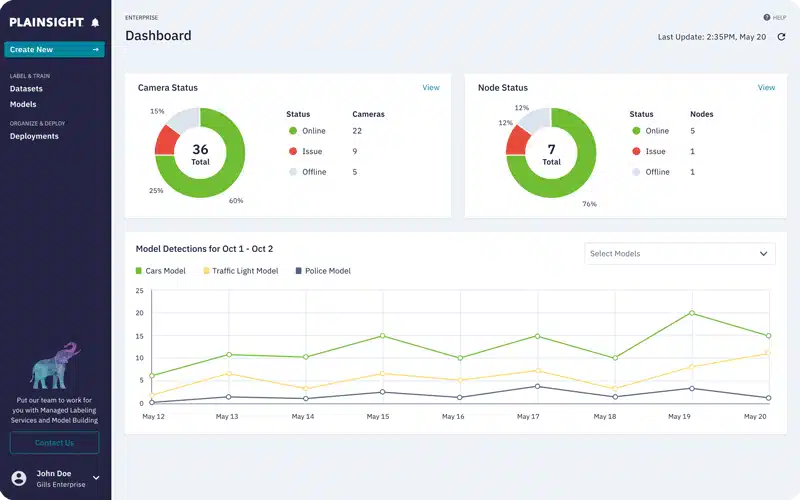

Vision Intelligence For Your Business Starts Here

Turning what you see in your environment into actionable intelligence is a must-have for any business.

- Captures intelligence from any camera in your environment

- Future-proofed against AI ecosystem changes while easy to set up

- Connects to existing dashboards and alerts to avoid data silos

- Doesn’t require AI expertise, programmers, or consultants

- Meets privacy, security, and compliance requirements

- Scales to the entire enterprise

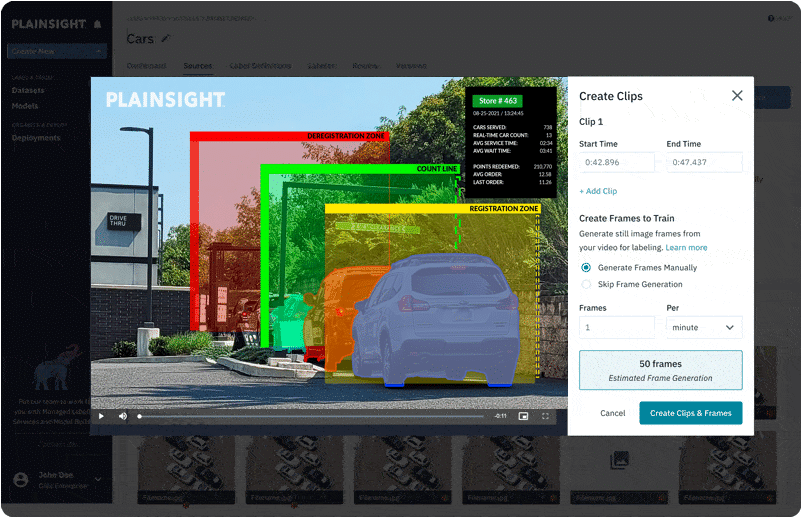

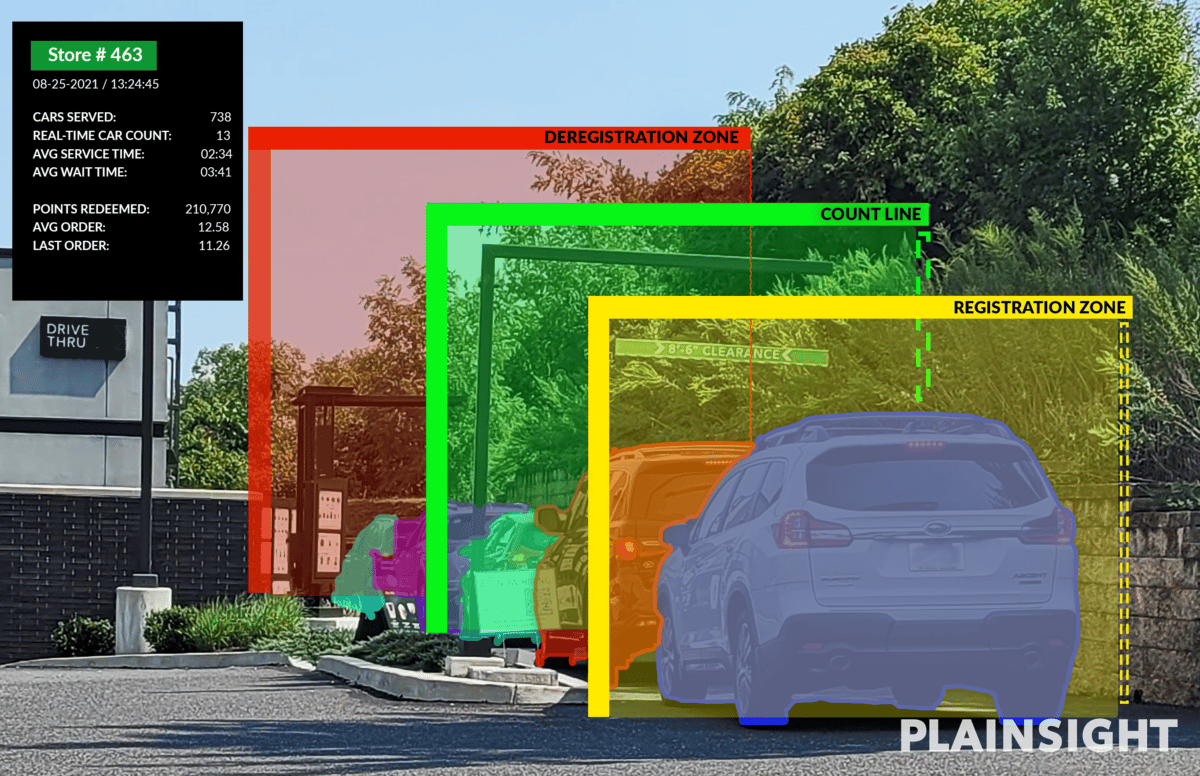

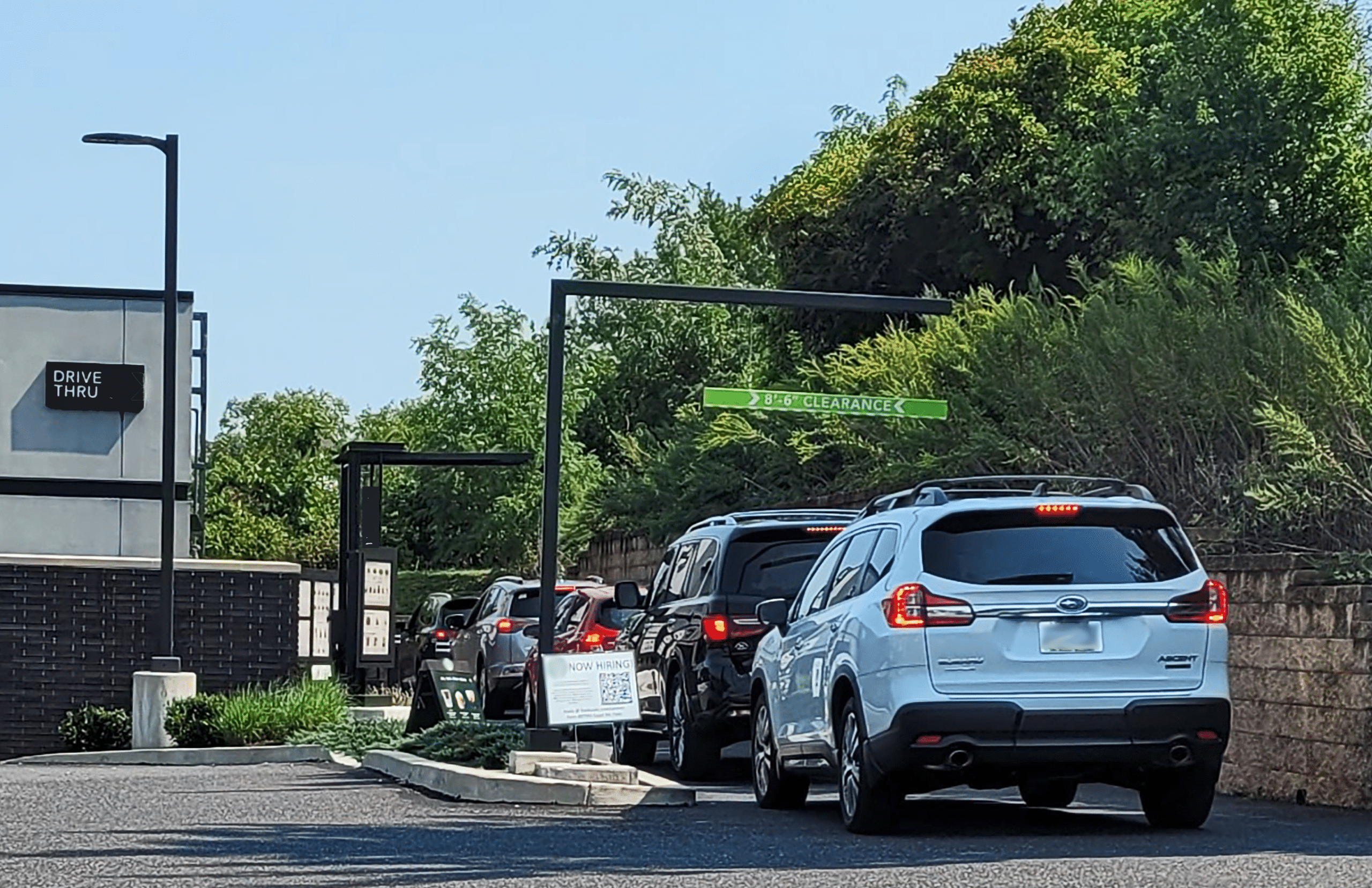

See Insights About Your Customer Experience

Solve business problems by using Plainsight Visual Intelligence Filters to understand how consumers behave in natural settings.

Trusted by Partners